This Week in AI: Addressing racism in AI image generators

Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week in AI, Google paused its AI chatbot Gemini’s ability to generate images of people after a segment of users complained about historical inaccuracies. Asked to depict “a Roman army”, for instance, Gemini would show an anachronistic, cartoonish group of racially diverse soldiers while rendering “Zulu warriors” as Black.

It appears that Google – like some other AI vendors, including OpenAI – had implemented clumsy hardcoding under the hood in an attempt to “correct” for biases in its models. In response to prompts such as “Show me only images of women” or “Show me only images of men”, Gemini would refuse, asserting that such images could “contribute to the exclusion and marginalization of other genders.” Gemini was also reluctant to generate images of people identified solely by their race – for example “white people” or “black people” – out of ostensible concern for “reducing individuals to their physical characteristics”.

Right-wingers have seized on the bugs as evidence of a “woke” agenda being pushed by the tech elite. But it doesn’t take Occam’s razor to see the less nefarious truth: Google, burned by its tools’ biases before (see: classifying Black people as gorillas, mistaking thermal guns in Black people’s hands for weapons, and so on), is so desperate to avoid history repeating itself that it’s manifesting a less biased world in its image-generating models, however erroneous that may be.

In her best-selling book “White Fragility,” anti-racism educator Robin DiAngelo writes about how the erasure of race – “color blindness,” by another phrase – contributes to systemic racial power imbalances rather than alleviating them. By claiming to “not see color” or reinforcing the notion that simply acknowledging the struggles of people of other races is enough to call oneself “woke,” people perpetuate harm by avoiding any substantive discussion of the topic, DiAngelo says.

Google’s ginger treatment of race-based prompts in Gemini didn’t avoid the issue so much as disingenuously attempt to conceal the worst of the model’s biases. One could argue (and many have) that these biases shouldn’t be ignored or glossed over, but rather addressed in the broader context of the training data from which they arise – that is, society on the World Wide Web.

Yes, the data sets used to train image generators generally contain more white people than Black people, and yes, the images of Black people in those data sets reinforce negative stereotypes. That’s why image generators sexualize certain women of color, depict white men in positions of authority and generally favor wealthy Western perspectives.

Some may argue that there’s no winning for AI vendors. Whether they tackle – or choose not to tackle – their models’ biases, they’ll be criticized. And that’s true. But I’d argue that, either way, these models are lacking in explanation, packaged in a way that minimizes the ways in which their biases manifest.

If AI vendors addressed their models’ shortcomings head on, in humble and transparent language, it would go a lot further than haphazard attempts to “fix” what is essentially unfixable bias. The truth is that we all have biases, and we don’t treat people the same way as a result. Neither do the models we’re building. And we’d do well to acknowledge that.

Here are some other AI stories of note from the past few days:

- Women in AI: TechCrunch launched a series highlighting notable women in the field of AI. Read the list here.

- Stable Diffusion v3: Stability AI has announced Stable Diffusion 3, the latest and most powerful version of the company’s image-generating AI model, based on a new architecture.

- Chrome gets GenAI: Google’s new Gemini-powered tool in Chrome allows users to rewrite existing text on the web or generate something completely new.

- Blacker than ChatGPT: Creative ad agency McKinney developed a quiz game, Are You Blacker Than ChatGPT?, to shine a light on AI bias.

- Calls for laws: Hundreds of AI luminaries signed a public letter earlier this week calling for anti-deepfake legislation in the U.S.

- Match made in AI: OpenAI has a new customer in Match Group, the owner of apps including Hinge, Tinder and Match, whose employees will use OpenAI’s AI tech to accomplish work-related tasks.

- DeepMind safety: Google’s AI research division, DeepMind, has formed a new organization, AI Safety and Alignment, made up of existing teams working on AI safety but also broadened to include new, specialized groups of GenAI researchers and engineers.

- Open models: Barely a week after launching the latest version of its Gemini models, Google released Gemma, a new family of lightweight open source models.

- House task force: The U.S. House of Representatives has established a task force on AI that, as Devin writes, feels like a punt after years of indecision that show no sign of ending.

More Machine Learning

AI models seem to know a lot, but what do they actually know? Well, the answer is nothing. But if you phrase the question a bit differently… it turns out they do seem to have internalized some “senses” of meaning that are similar to those humans have. Although no AI truly understands what a cat or a dog is, could it have some sense of similarity encoded in its embeddings of those two words that is different from, say, cat and bottle? Amazon researchers believe so.

Their research compared the “trajectories” of similar but distinct sentences, such as “The dog barked at the thief” and “The thief caused the dog to bark”, with those of grammatically similar but different sentences, such as “A cat sleeps all day” and “A girl jogs all afternoon.” They found that the pairs humans would find similar were indeed treated internally as more similar despite being grammatically different, and the reverse held for the grammatically similar pairs. OK, I realize that this paragraph was a bit confusing, but suffice it to say that the meanings encoded in LLMs appear to be more robust and sophisticated than expected, not totally naive.
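
For readers who want a feel for what “similarity in the embeddings” means, here is a minimal sketch using cosine similarity, a common way to compare embedding vectors. It is not the Amazon team’s method, which compares trajectories across whole sentences. The three-dimensional vectors and the `toy_embeddings` name are invented purely for illustration; real models use hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional "embeddings", made up for this example only.
toy_embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "bottle": [0.1, 0.2, 0.9],
}

cat_dog = cosine_similarity(toy_embeddings["cat"], toy_embeddings["dog"])
cat_bottle = cosine_similarity(toy_embeddings["cat"], toy_embeddings["bottle"])

print(f"cat vs. dog:    {cat_dog:.3f}")
print(f"cat vs. bottle: {cat_bottle:.3f}")
```

With these made-up numbers, the cat and dog vectors point in nearly the same direction while cat and bottle do not, which is the kind of gap the researchers were probing for in real models.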

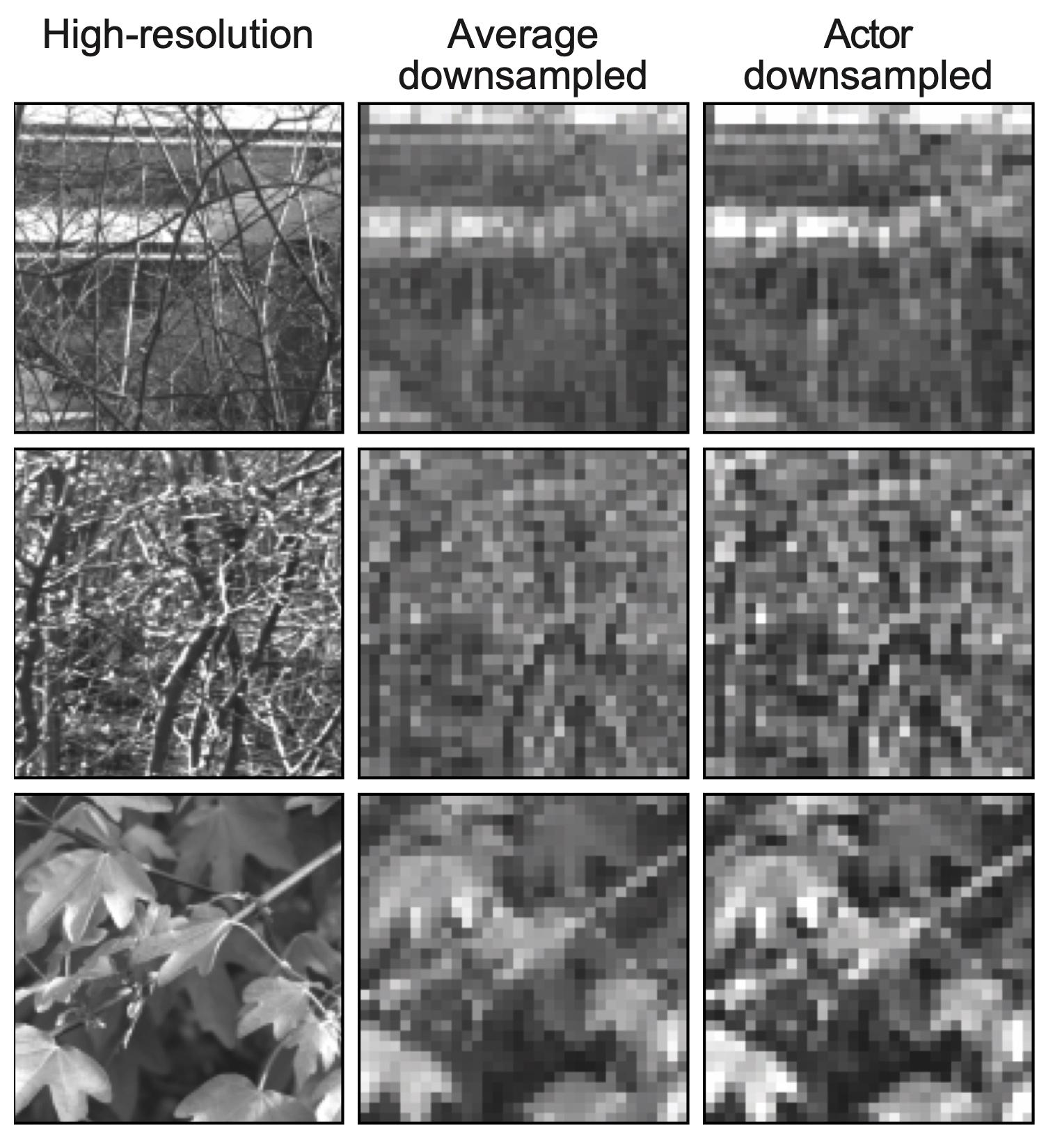

Neural encoding is proving useful in artificial vision, Swiss researchers at EPFL have found. Artificial retinas and other ways of replacing parts of the human visual system generally have very limited resolution due to the limitations of microelectrode arrays. So no matter how detailed the incoming image is, it has to be transmitted at very low fidelity. But there are different ways of downsampling, and this team found that machine learning does a great job at it.

Image Credit: EPFL

“We found that if we applied a learning-based approach, we got better results in terms of optimized sensory encoding. But what was more surprising was that when we used an unsupervised neural network, it learned to mimic aspects of retinal processing on its own,” Diego Ghezzi said in a news release. It basically does perceptual compression. They tested it on mouse retinas, so it isn’t just theoretical.
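
To make “downsampling” concrete, here is a minimal sketch of one fixed, non-learned approach: averaging each block of pixels into a single value. This is only an assumed baseline for illustration, not the EPFL team’s technique, which learns how to compress the image instead of applying a fixed rule. The `average_pool` function and the 4×4 sample grid are hypothetical.

```python
def average_pool(image, block):
    """Downsample a 2D grid by averaging non-overlapping block x block tiles."""
    rows, cols = len(image), len(image[0])
    if rows % block or cols % block:
        raise ValueError("image dimensions must be multiples of block")
    pooled = []
    for r in range(0, rows, block):
        pooled_row = []
        for c in range(0, cols, block):
            # Collect every pixel in the current tile, then average them.
            tile = [image[r + dr][c + dc]
                    for dr in range(block)
                    for dc in range(block)]
            pooled_row.append(sum(tile) / len(tile))
        pooled.append(pooled_row)
    return pooled


# Made-up 4x4 "image"; each 2x2 tile collapses to one low-resolution value.
image = [
    [0, 0, 8, 8],
    [0, 0, 8, 8],
    [4, 4, 2, 2],
    [4, 4, 2, 2],
]

low_res = average_pool(image, 2)
print(low_res)
```

A fixed rule like this treats every tile the same way; the appeal of a learned encoder is that it can decide which details are worth preserving when only a handful of output values are available.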

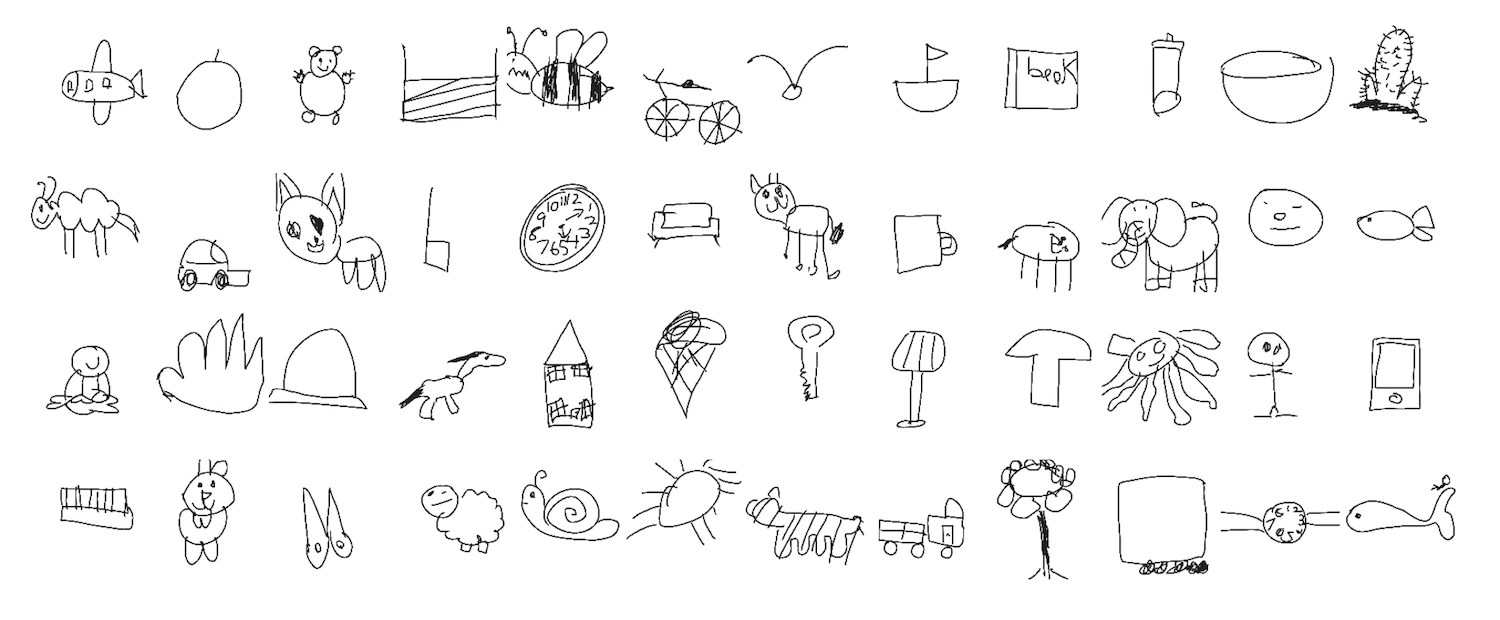

An interesting application of computer vision by Stanford researchers hints at a mystery in how children develop their drawing skills. The team solicited and analyzed 37,000 drawings by kids of various objects and animals, and also (based on kids’ responses) how recognizable each drawing was. Interestingly, it wasn’t just the inclusion of signature features, such as a rabbit’s ears, that made drawings more recognizable to other kids.

“The kinds of features that make older children’s drawings recognizable don’t seem to be driven by a single feature that all older kids learn to include in their drawings. It’s something more complex that these machine learning systems are picking up on,” said lead researcher Judith Fan.

Chemists (also at EPFL) found that LLMs are surprisingly adept at helping out with their work even after minimal training. This isn’t about doing the chemistry directly, but about being fine-tuned on a body of work that individual chemists can’t possibly know all of. For instance, thousands of papers may contain a few hundred statements about whether a high-entropy alloy is single or multiple phase (you don’t need to know what that means – they do). The system (based on GPT-3) can be trained on these yes/no questions and answers, and is soon able to extrapolate from them.

This is not a huge advance, just more evidence that LLMs are a useful tool in this sense. “The point is that it’s as simple as doing a literature search, which works for many chemical problems,” said researcher Berend Smit. “Querying the underlying model may become a routine way to bootstrap a project.”

Last, a word of caution from Berkeley researchers, though now that I’m reading the post again I see EPFL was involved in this one too. Go Lausanne! The group found that imagery found via Google was much more likely to reinforce gender stereotypes for certain jobs and words than text mentioning the same thing. And in both cases there were simply far more men present.

Not only that, but in one experiment, they found that people who viewed images instead of reading text while researching a role more reliably associated those roles with one gender, even days later. “It’s not just about the frequency of gender bias online,” said researcher Douglas Guilbeault. “Part of the story here is that there is something very sticky, very powerful about the representation of images of people that is not in the text.”

With things like the Google image generator diversity controversy going on, it’s easy to lose sight of the established and frequently verified fact that the source of data for many AI models shows serious bias, and that this bias has real effects on people.