India, grappling with election misinformation, weighs up labels and its own AI safety coalition

India, long in the tooth when it comes to co-opting technology to persuade the public, has become a global hot spot for how AI is being used, and misused, in political discourse and particularly in the democratic process. Tech companies, which built the tools in the first place, are traveling to the country to push solutions.

Earlier this year, Andy Parsons, a senior director at Adobe who oversees its participation in the cross-industry Content Authenticity Initiative (CAI), stepped into the whirlpool when he traveled to India to meet with media and tech organizations there and promote tools that can be integrated into content workflows to identify and flag AI content.

“Instead of trying to detect what’s fake or manipulated, we as a society, and this is an international concern, should start to declare authenticity, meaning that if something is generated by AI, consumers should know that,” he said in an interview.

Parsons said some Indian companies, which are not currently part of the Munich AI election safety accord signed in February by companies including OpenAI, Adobe, Google and Amazon, intend to form a similar alliance in the country.

“Legislation is a very tricky thing. It is hard to rely on the assumption that the government will legislate correctly and quickly enough in any jurisdiction. It is better for the government to take a very steady approach and take its time,” he said.

Detection tools are famously inconsistent, but they are a start toward fixing some of the problems, or so the argument goes.

“The concept is already well understood,” he said during his visit to Delhi. “I’m helping raise awareness that the tools are also ready. This isn’t just an idea. It’s something that’s already deployed.”

Andy Parsons, senior director at Adobe. Image Credits: Adobe

The CAI, which promotes royalty-free, open standards for identifying whether digital content was generated by a machine or a human, predates the current hype around generative AI: it was founded in 2019 and now has 2,500 members, including Microsoft, Meta, Google, The New York Times, The Wall Street Journal and the BBC.

Just as an industry is growing around the business of leveraging AI to create media, a smaller one is taking shape to try to rein in some of its more nefarious applications.

So in February 2021, Adobe went a step further into building one of those standards itself and co-founded the Coalition for Content Provenance and Authenticity (C2PA) with ARM, the BBC, Intel, Microsoft and Truepic. The coalition aims to develop an open standard that taps the metadata of images, video, text and other media to surface their provenance, telling people where a file came from, where and when it was created, and whether it was altered before it reached the user. The CAI works with the C2PA to promote the standard and make it available to the public.

It is now actively engaging with governments such as India's to widen adoption of that standard for surfacing the provenance of AI content, and to work with authorities on developing guidelines for AI's advancement.

Adobe has nothing, and yet everything, to lose by taking an active role in this game. It is not (yet) acquiring or building large language models of its own, but as the home of apps like Photoshop and Lightroom, it is the market leader in tools for the creative community. So not only is it building new products like Firefly to generate AI content natively, it is also infusing its legacy products with AI. If the market develops as some believe it will, AI will be essential if Adobe wants to stay on top. If regulators (or common sense) have their way, Adobe's future may depend on how successful it is at ensuring that what it sells does not contribute to the mess.

The bigger picture in India is, in any case, a real mess.

Google has focused on India as a testing ground for how it will restrict the use of its generative AI tool Gemini for election content; parties are weaponizing AI to create memes featuring opponents' likenesses; Meta has set up a deepfake “helpline” for WhatsApp, such is the messaging platform's role in spreading AI-powered messages; and at a time when countries are growing increasingly concerned about AI safety and what they must do to ensure it, it remains to be seen what effect the Indian government's decision in March to relax rules on how new AI models are built, tested and deployed will have. The move is certainly meant to encourage more AI activity, at any rate.

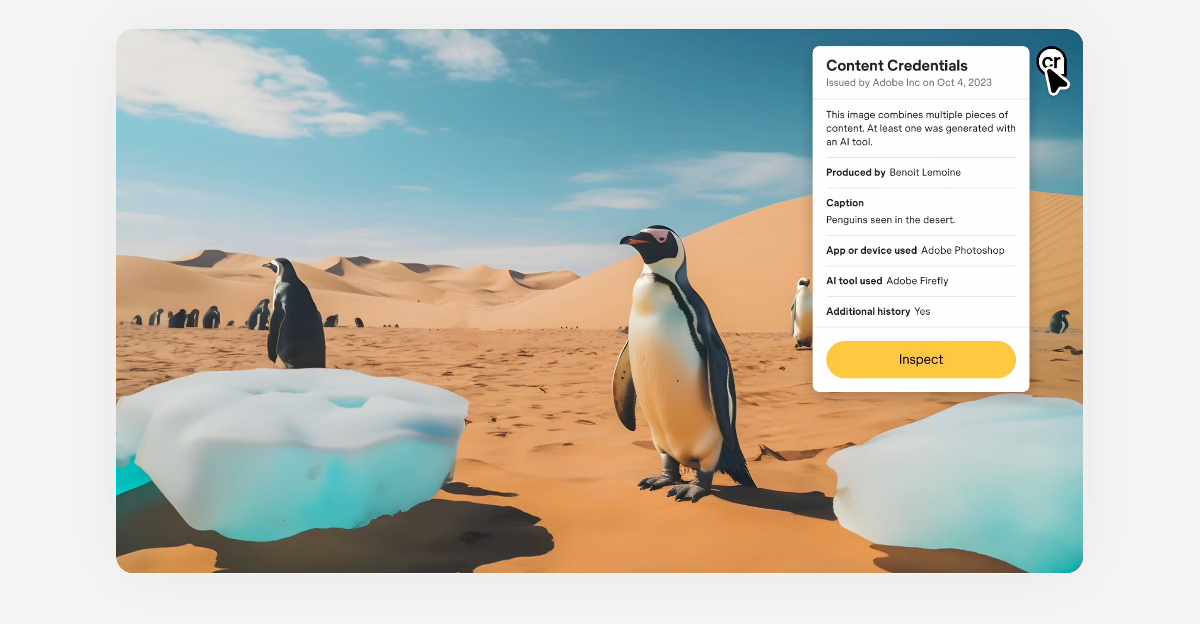

Using its open standard, the C2PA has developed a digital nutrition label for content called Content Credentials. CAI members are working to deploy the digital watermark on their content so users can know its origin and whether it is AI-generated. Adobe has Content Credentials across its creative tools, including Photoshop and Lightroom, and they are automatically attached to AI content generated by Adobe's AI model, Firefly. Last year, Leica launched a camera with Content Credentials built in, and Microsoft added Content Credentials to all AI-generated images created using Bing Image Creator.

Image Credits: Content Credentials

Parsons told TechCrunch that the CAI is talking with governments around the world in two areas: one is to help promote the standard as an international standard, and the other is to get it adopted.

“In an election year, it is especially important for candidates, parties, incumbent offices and administrations who release material to the media and the public all the time to make sure it is knowable that if something is released from PM (Narendra) Modi’s office, it is actually from PM Modi’s office. There have been many incidents where that is not the case. So, understanding whether something is truly authentic is very important for consumers, fact-checkers, platforms and intermediaries,” he said.

He said India's large population and vast linguistic and demographic diversity make curbing misinformation challenging, and argued in favor of simple labels to cut through that.

“That’s a little ‘CR’ … it’s two Western letters like most Adobe tools, but it indicates there’s more context to be shown,” he said.

Controversy continues over what the real motive might be behind tech companies supporting any kind of AI safety measure: is it really about existential concern, or simply about having a seat at the table to give the appearance of existential concern, all while making sure their interests are protected in the rule-making process?

“This is generally not controversial with the companies that are involved, and all the companies that signed the recent Munich accord, including Adobe, came together and set aside competitive pressures because these ideas are something we all need to do,” he said in defense of the work.
