This week in AI: Let’s not neglect the humble data annotator

Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week in AI, I’d like to turn the spotlight on labeling and annotation startups, such as Scale AI, which is reportedly in talks to raise new funds at a $13 billion valuation. Labeling and annotation platforms might not get the attention that flashy new generative AI models like OpenAI’s Sora do. But they’re essential. Without them, modern AI models arguably wouldn’t exist.

The data on which many models are trained has to be labeled. Why? Labels, or tags, help the models understand and interpret the data during the training process. For example, labels used to train an image recognition model might take the form of markings around objects (“bounding boxes”) or captions referring to each person, place or object depicted in an image.

The accuracy and quality of labels significantly affect the performance, and reliability, of the trained models. And annotation is a vast undertaking, requiring thousands to millions of labels for the larger and more sophisticated data sets in use.
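To make “bounding boxes” concrete, here is a minimal sketch of what a single image label might look like, plus intersection over union (IoU), one common way to check how closely two annotators’ boxes for the same object agree. The `BoundingBox` class and its (x_min, y_min, x_max, y_max) pixel convention are assumptions for illustration; real annotation platforms each define their own schema.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """One labeled object: a class label plus a box in pixel coordinates.

    Hypothetical schema for illustration: (x_min, y_min) is the top-left
    corner and (x_max, y_max) the bottom-right corner.
    """
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Width times height; a degenerate (inverted) box has zero area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: BoundingBox, b: BoundingBox) -> float:
    """Intersection over union: 1.0 for identical boxes, 0.0 for disjoint ones."""
    # Overlap along each axis (zero if the boxes don't overlap on that axis).
    ix = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    iy = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    inter = ix * iy
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    # Two annotators box the same dog, but their boxes are offset.
    first = BoundingBox("dog", 0, 0, 10, 10)
    second = BoundingBox("dog", 5, 5, 15, 15)
    print(round(iou(first, second), 3))  # 0.143
```

A low IoU between annotators is one signal that a label needs review, which is part of why label quality takes so much human effort.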

So you’d think that data annotators would be treated well, paid a living wage and given the same benefits enjoyed by the engineers who build the models. But often the opposite is true, a product of the brutal working conditions that many annotation and labeling startups foster.

Companies with billions in the bank, such as OpenAI, have relied on annotators in third-world countries who are paid only a few dollars per hour. Some of these annotators are exposed to highly disturbing content, such as graphic imagery, yet aren’t given time off (as they’re usually contractors) or access to mental health resources.

An excellent piece in NY Mag pulls back the curtain on Scale AI in particular, which recruits annotators in far-flung places such as Nairobi, Kenya. Some of the tasks on Scale AI take labelers multiple eight-hour workdays, with no breaks, and pay as little as $10. And these workers are beholden to the whims of the platform. Annotators sometimes go long stretches without receiving work, or are unceremoniously booted off Scale AI, as recently happened to contractors in Thailand, Vietnam, Poland and Pakistan.

Some annotation and labeling platforms claim to provide “fair-trade” work. In fact, they’ve made it a central part of their branding. But as MIT Tech Review’s Kate Kaye notes, there are no regulations, only weak industry standards for what ethical labeling work means, and companies’ own definitions vary widely.

So, what to do? Barring a massive technological breakthrough, the need to annotate and label data for AI training isn’t going away. We can hope that the platforms will self-regulate, but the more realistic solution seems to be policymaking. That is a tricky prospect in itself, but I’d argue it’s the best shot we have at changing things for the better. Or at least starting to.

Here are some other AI stories of note from the past few days:

- OpenAI builds a voice cloner: OpenAI is previewing a new AI-powered tool it developed, Voice Engine, which lets users clone a voice from a 15-second recording of someone speaking. But the company is choosing not to release it widely (yet), citing risks of misuse.

- Amazon doubles down on Anthropic: Amazon has invested a further $2.75 billion in rising AI power Anthropic, following through on the option it left open last September.

- Google.org launches an accelerator: Google.org, Google’s charitable arm, is launching a new $20 million, six-month program to help fund nonprofits developing technology that leverages generative AI.

- A new model architecture: AI startup AI21 Labs has released a generative AI model, Jamba, that employs a novel, new(ish) model architecture, state space models (SSMs), to improve efficiency.

- Databricks launches DBRX: In other model news, Databricks this week released DBRX, a generative AI model akin to OpenAI’s GPT series and Google’s Gemini. The company claims it achieves state-of-the-art results on a number of popular AI benchmarks, including several that measure reasoning.

- Uber Eats and UK AI regulation: Natasha writes about how an Uber Eats courier’s fight against AI bias shows that justice under the UK’s AI rules is hard-won.

- EU election security guidance: The European Union published draft election security guidelines on Tuesday aimed at the roughly two dozen platforms regulated under the Digital Services Act, including guidelines on preventing content recommendation algorithms from spreading generative AI-based disinformation (aka political deepfakes).

- Grok gets upgraded: X’s Grok chatbot will soon get an upgraded underlying model, Grok-1.5, and at the same time all Premium subscribers on X will gain access to Grok. (Grok was previously exclusive to X Premium+ customers.)

- Adobe expands Firefly: This week, Adobe unveiled Firefly Services, a set of more than 20 new generative and creative APIs, tools and services. It also launched Custom Models, which lets businesses fine-tune Firefly models on their own assets, part of Adobe’s new GenStudio suite.
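The Jamba item above mentions state space models (SSMs). As a rough illustration of where their efficiency comes from, here is a minimal sketch of the simplest possible SSM: a scalar, time-invariant linear recurrence that carries a fixed-size state through a sequence, so each step costs the same no matter how long the input gets. The function `ssm_scan` and its parameters `a`, `b`, `c` are hypothetical; Jamba’s actual layers (Mamba-style SSM blocks interleaved with transformer layers) are far more elaborate, with learned, input-dependent parameters.

```python
from typing import List


def ssm_scan(xs: List[float], a: float, b: float, c: float) -> List[float]:
    """Run a scalar, time-invariant linear state space model over a sequence.

    h_t = a * h_{t-1} + b * x_t   (state update)
    y_t = c * h_t                 (readout)

    Illustrative toy only: real SSM layers use vector states and learned,
    often input-dependent, parameters.
    """
    h = 0.0  # the entire "memory" of the sequence so far is this one number
    ys = []
    for x in xs:
        h = a * h + b * x  # fold the new input into the running state
        ys.append(c * h)   # emit an output from the current state
    return ys


if __name__ == "__main__":
    # A single impulse followed by zeros: the state decays by a factor of a each step.
    print(ssm_scan([1.0, 0.0, 0.0, 0.0], a=0.5, b=1.0, c=2.0))  # [2.0, 1.0, 0.5, 0.25]
```

Because the state here is a single number, running this over a million tokens needs no more memory than running it over four, which is the property that makes SSMs attractive next to attention, whose cost grows with context length.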

More Machine Learning

How’s the weather? AI is increasingly able to tell you. I noted a few efforts in hourly, weekly and century-scale forecasting a few months ago, but like all things AI, the field is moving fast. The teams behind MetNet-3 and GraphCast have published a paper describing a new system called SEEDS, for Scalable Ensemble Envelope Diffusion Sampler.

Animation showing how generating more predictions produces a more even distribution of weather outcomes.

SEEDS uses diffusion to generate “ensembles” of plausible weather outcomes for an area based on the input (radar readings or orbital imagery, perhaps), much faster than physics-based models can. With larger ensembles, it can cover more edge cases (such as an event that occurs in only 1 of 100 possible scenarios) and be more confident about the more likely situations.
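The intuition about edge cases comes down to simple probability. Here is a minimal sketch, assuming each ensemble member independently has a 1-in-100 chance of showing the rare event; that independence is a simplification, and this is not how SEEDS itself is evaluated. The helper `prob_event_sampled` is hypothetical.

```python
def prob_event_sampled(p: float, n: int) -> float:
    """Chance that at least one of n ensemble members shows a rare event.

    Assumes (for illustration) that members are independent and each shows
    the event with probability p, so the chance that none do is (1 - p) ** n.
    """
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    for n in (10, 100, 1000):
        # Roughly 0.096, 0.634 and 1.0 for a 1-in-100 event.
        print(n, round(prob_event_sampled(0.01, n), 3))
```

With 10 members, a 1-in-100 event shows up in under 10% of ensembles; with 1,000 it almost always appears, which is why cheaper ensemble generation matters for catching rare outcomes.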

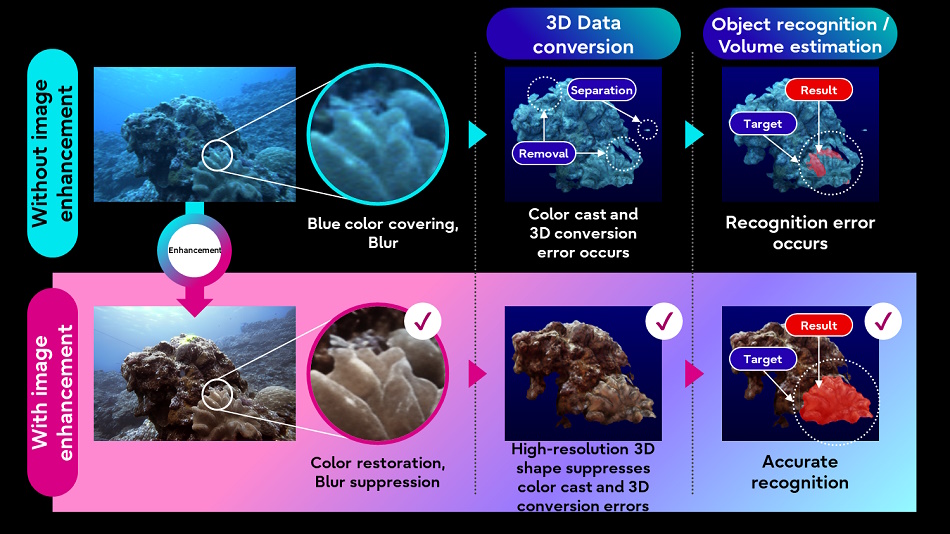

Fujitsu is also hoping to better understand the natural world, by applying AI image-processing techniques to underwater imagery and lidar data collected by autonomous underwater vehicles. Improving the quality of the imagery will let other, less sophisticated processes (such as 3D conversion) work better on the target data.

Image Credit: Fujitsu

The idea is to build a “digital twin” of the underwater environment that can help simulate and predict new developments. We’re a long way from that, but you have to start somewhere.

Over among the LLMs, researchers have found that the models mimic intelligence using an even simpler mechanism than expected: linear functions. Frankly, the math is beyond me (vector stuff in many dimensions), but this writeup from MIT makes it clear that the recall mechanism of these models is pretty… basic.

“Even though these models are really complicated, nonlinear functions that are trained on lots of data and are very hard to understand, there are sometimes really simple mechanisms working inside them. This is one instance of that,” said co-lead author Evan Hernandez. If you’re more technically minded, check out the paper.
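For the more technically minded, here is a rough sketch of the idea as we read it: for a given relation r (say, “is the capital of”), the way a model turns its internal representation of a subject into a representation of the answer can often be approximated by one affine map. The notation is our own simplification, not the paper’s exact formulation: s is the model’s hidden-state vector for the subject (“France”), o is the representation the answer (“Paris”) is decoded from, and W_r and b_r are a matrix and bias vector specific to relation r.

$$
\mathbf{o} \;\approx\; W_r\,\mathbf{s} + \mathbf{b}_r
$$

“Linear” here means that one matrix multiply and one addition stand in for many layers of nonlinear computation, at least for the relations where the approximation holds.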

One way these models can fail is by not understanding context or feedback. Even a really capable LLM might not “get it” if you tell it your name is pronounced a certain way, since these models don’t actually know or understand anything. In cases where that matters, such as human-robot interactions, it could put people off if a robot behaves that way.

Disney Research has been looking into automated character interactions for a long time, and this paper on name pronunciation and reuse showed up just a little while ago. It seems obvious, but extracting the phonemes when someone introduces themselves, and encoding those rather than just the written name, is a smart approach.

Image Credit: Disney Research

Finally, as AI and search overlap more and more, it’s worth reassessing how these tools are used and whether this unholy union presents any new risks. Safiya Umoja Noble has been an important voice in AI and search ethics for years, and her opinions are always enlightening. She gave a great interview to the UCLA news team about how her work has evolved and why we need to stay vigilant when it comes to bias and bad habits in search.