Armilla wants to give companies a warranty for AI

Lots can go wrong with GenAI, especially third-party GenAI. It makes things up. It can be biased and toxic. And it can run afoul of copyright rules. According to a recent survey by MIT Sloan Management Review and Boston Consulting Group, third-party AI tools are responsible for more than 55% of AI-related failures in organizations.

So it's no surprise that some companies are still wary of adopting the technology.

But what if GenAI came with a warranty?

This business idea came to Karthik Ramakrishnan, an entrepreneur and electrical engineer, several years ago while he was working as a senior manager at Deloitte. He had co-founded two "AI-first" companies, Gallup Labs and Blue Trumpet, and eventually came to realize that trust, and the ability to quantify risk, was holding back AI adoption.

“Right now, almost every enterprise is looking for ways to implement AI to increase efficiency and keep up with the market,” Ramakrishnan told TechCrunch in an email interview. “To do this, many are turning to third-party vendors and implementing their AI models without a full understanding of the quality of the products... AI is moving at such a fast pace that the risks and downsides are always evolving.”

So Ramakrishnan teamed up with Dan Adamson, an expert in search algorithms and a two-time startup founder, to start Armilla AI, which provides warranties on AI models to corporate customers.

You might be wondering how Armilla can do this, given that most models are black boxes or otherwise locked behind licenses, subscriptions and APIs. I had the same question. Ramakrishnan's answer: through benchmarking, and a careful approach to customer acquisition.

Armilla takes a model, whether open source or proprietary, and runs assessments on it to “verify its quality,” informed by the global AI regulatory landscape. Using its in-house evaluation technology, the company tests for things like hallucinations, racial and gender bias and fairness, and general robustness and security across a range of theoretical applications and use cases.

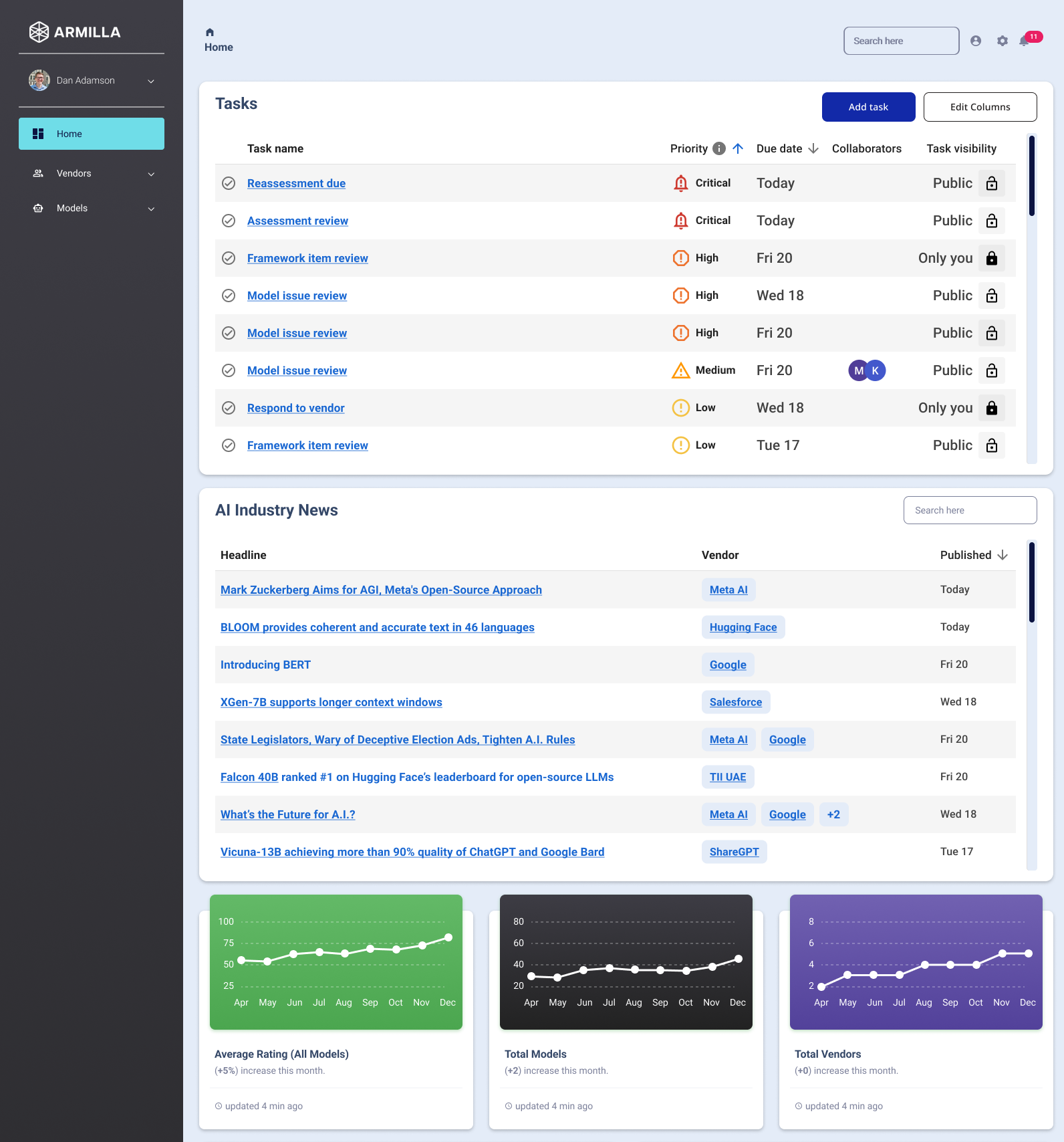

Image Credit: Vest

If the model passes muster, Armilla backs it with its warranty, which reimburses the buyer of the model for any fees they paid to use it.

“What we really offer enterprises is confidence in the technology they're procuring from third-party AI vendors,” Ramakrishnan said. “Enterprises can come to us and have us assess the vendors they want to use. Just like they do penetration testing for new technology, we do penetration testing for AI.”

I asked Ramakrishnan, by the way, whether there are any models Armilla won't test for ethical reasons, say a facial recognition algorithm from a vendor known to do business with questionable actors. He said:

“It would not only be against our ethics, but against our business model, which is predicated on trust, to provide assessments and reports that give false confidence in AI models that are problematic for the client and for society. From a legal perspective, we're not going to take on clients for models that are prohibited by the EU, that have been banned, as is the case for example with some facial recognition and biometric categorization systems. But applications that fall into the ‘high risk’ category as defined by the EU AI Act, yes.”

Now, the concept of warranties and policy coverage for AI isn't new, a fact that frankly surprised this writer. Last year, Munich Re launched an insurance product, AISure, designed to protect against losses from potentially unreliable AI models by running models through benchmarks similar to Armilla's. Beyond warranties, a growing number of vendors, including OpenAI, Microsoft and AWS, offer protections related to copyright infringement that might arise from the deployment of their AI tools.

But Ramakrishnan claims that Armilla's approach is unique.

“Our assessments touch many areas, including KPIs, processes, performance, data quality, and qualitative and quantitative criteria, and we do it at a fraction of the cost and time,” he said. “We assess AI models based on the requirements laid out in legislation such as the EU AI Act or the AI hiring bias law in NYC (NYC Local Law 144), and other state regulations such as Colorado's proposed AI quantitative testing regulation or New York's insurance circular on the use of AI in underwriting or pricing. We are also prepared to conduct the required assessments as other emerging regulations come into force, such as Canada's AI and Data Act.”

Armilla, which launched coverage in late 2023 backed by carriers Swiss Re, Greenlight Re and Chaucer, claims to have 10 or more customers, including a healthcare company that is using GenAI to process medical records. Ramakrishnan told me that Armilla's customer base has been growing 2x month-over-month since Q4 2023.

“We are serving two main audiences: enterprises and third-party AI vendors,” Ramakrishnan said. “Enterprises use our warranty to establish protection for the third-party AI vendors they're procuring. Third-party vendors use our warranty as a seal of approval that their product is trustworthy, which helps shorten their sales cycles.”

Warranties for AI make intuitive sense. But I wonder whether Armilla will be able to keep up with rapidly changing AI policy (e.g. New York City's hiring algorithm bias law, the EU AI Act, etc.), which could put it on the hook for big payouts if its assessments, and contracts, aren't bulletproof.

Ramakrishnan brushed aside this concern.

“Regulation is rapidly evolving independently in many jurisdictions,” he said, “and it will be important to understand the nuances of the law around the world. There's no ‘one-size-fits-all’ that we can implement as a global standard, so we need to piece this together. It's challenging, but it also has the benefit of creating a ‘moat’ for us.”

Armilla, which is based in Toronto and has 13 employees, recently raised $4.5 million in a seed round led by Mistral (not to be confused with the AI startup of the same name) with participation from Greycroft, Differentiated Venture Capital, Mozilla Ventures, Betaworks Ventures, MS&AD Ventures, 630 Ventures, Morgan Creek Digital, Y Combinator, Greenlight Re and Chaucer. The round brings Armilla's total raised to $7 million, and Ramakrishnan said the money will be used to expand Armilla's existing warranty offering as well as to introduce new products.

“Insurance will play the biggest role in addressing AI risk, and Armilla is at the forefront of developing insurance products that will allow companies to deploy AI solutions safely,” Ramakrishnan said.
