Adobe claims its new image generation model is its best yet

Firefly, Adobe’s family of generative AI models, doesn’t have the best reputation among creatives.

The Firefly image generation model in particular has been derided as underwhelming and flawed compared to Midjourney, OpenAI’s DALL-E 3, and other rivals, with a tendency to distort limbs and landscapes and miss nuances in prompts. But Adobe is trying to right the ship with its third-generation model, Firefly Image 3, which is being released during the company’s MAX London conference this week.

The model, now available in Photoshop (beta) and Adobe’s Firefly web app, produces more “realistic” imagery than its predecessor (Image 2) and its predecessor’s predecessor (Image 1) thanks to an ability to understand longer, more complex prompts and scenes, as well as improved lighting and text generation capabilities. Adobe says it should more accurately render things like typography, iconography, raster images, and line art, and that it is “significantly” better at depicting dense crowds and people with “detailed features” and “a variety of moods and expressions.”

For what it’s worth, in my brief, unscientific testing, Image 3 does seem to be a step up from Image 2.

I wasn’t able to try Image 3 myself. But Adobe PR sent some outputs and prompts from the model, and I managed to run those same prompts through Image 2 on the web to get samples to compare with the Image 3 outputs. (Note that the Image 3 outputs may have been cherry-picked.)

Note the lighting in this headshot from Image 3 compared with the one from Image 2 below it:

From Image 3. Prompt: “Studio portrait of young woman.”

Same prompt as above, from Image 2.

The Image 3 output looks more detailed and lifelike to my eyes, with shadowing and contrast that are largely absent from the Image 2 sample.

Here is a set of images that illustrates Image 3’s scene understanding:

From Image 3. Prompt: “An artist sitting at a desk in his studio looking pensive with lots of paintings and ether.”

Same prompt as above, from Image 2.

Note that the Image 2 sample is fairly basic compared to the Image 3 output in terms of level of detail and overall expressiveness. There is some wonkiness in the shirt of the subject in the Image 3 sample (around the waist area), but the pose is more complex than that of the subject in Image 2. (And the clothes in Image 2 are also a bit off.)

No doubt some of the improvements in Image 3 can be traced to a larger and more diverse training data set.

Like Image 2 and Image 1, Image 3 is trained on uploads to Adobe Stock, Adobe’s royalty-free media library, along with licensed content and public domain content for which the copyright has expired. Adobe Stock grows all the time, and as a result so does the available training data set.

In an effort to ward off lawsuits and position itself as a more “ethical” alternative to generative AI vendors that train on images indiscriminately (e.g. OpenAI, Midjourney), Adobe has a program to pay Adobe Stock contributors to the training data set. (We will note, however, that the terms of the program are rather opaque.) Controversially, Adobe also trains Firefly models on AI-generated images, which some consider a form of data laundering.

Recent Bloomberg reporting revealed that AI-generated images in Adobe Stock are not excluded from the training data of the Firefly image-generating models, a troubling prospect considering that those images could contain regurgitated copyrighted material. Adobe has defended the practice, claiming that AI-generated images make up only a small portion of its training data and go through a moderation process to ensure that they do not depict trademarks or recognizable characters or reference artists’ names.

Of course, neither diverse, more “ethically” sourced training data nor content filters and other safeguards guarantee a perfectly flaw-free experience – see users generating people flipping the bird with Image 2. The real test of Image 3 will come when the community gets its hands on it.

New AI-powered options

Image 3 powers several new features in Photoshop beyond improved text-to-image.

A new “style engine” in Image 3, along with a new auto-stylization toggle, allows the model to generate a wider range of colors, backgrounds, and subject poses. They feed into Reference Image, an option that lets users condition the model on an image whose colors or tone they want their future generated content to align with.

Three new generative tools – Generate Background, Generate Similar and Enhance Detail – use Image 3 to perform precise edits on images. The (self-descriptive) Generate Background replaces a background with a generated one that blends into the existing image, while Generate Similar offers variations on a selected part of a photo (e.g. a person or object). As for Enhance Detail, it “fine-tunes” images to improve sharpness and clarity.

If these features sound familiar, it’s because they have been in beta in the Firefly web app for at least a month (and in Midjourney for even longer). This marks their Photoshop debut – in beta.

Speaking of the web app, Adobe isn’t neglecting this alternative route to its AI tools.

With the release of Image 3, the Firefly web app is getting Structure Reference and Style Reference, which Adobe is pitching as new ways to “further extend creative control.” (Both were announced in March, but they are becoming widely available now.) With Structure Reference, users can generate new images that match the “structure” of a reference image, e.g. a head-on view of a race car. Style Reference is essentially style transfer by another name, preserving the content of an image (e.g. elephants on an African safari) while copying the style (e.g. pencil sketch) of a target image.

Here’s Structure Reference in action:

Original image.

Transformed with Structure Reference.

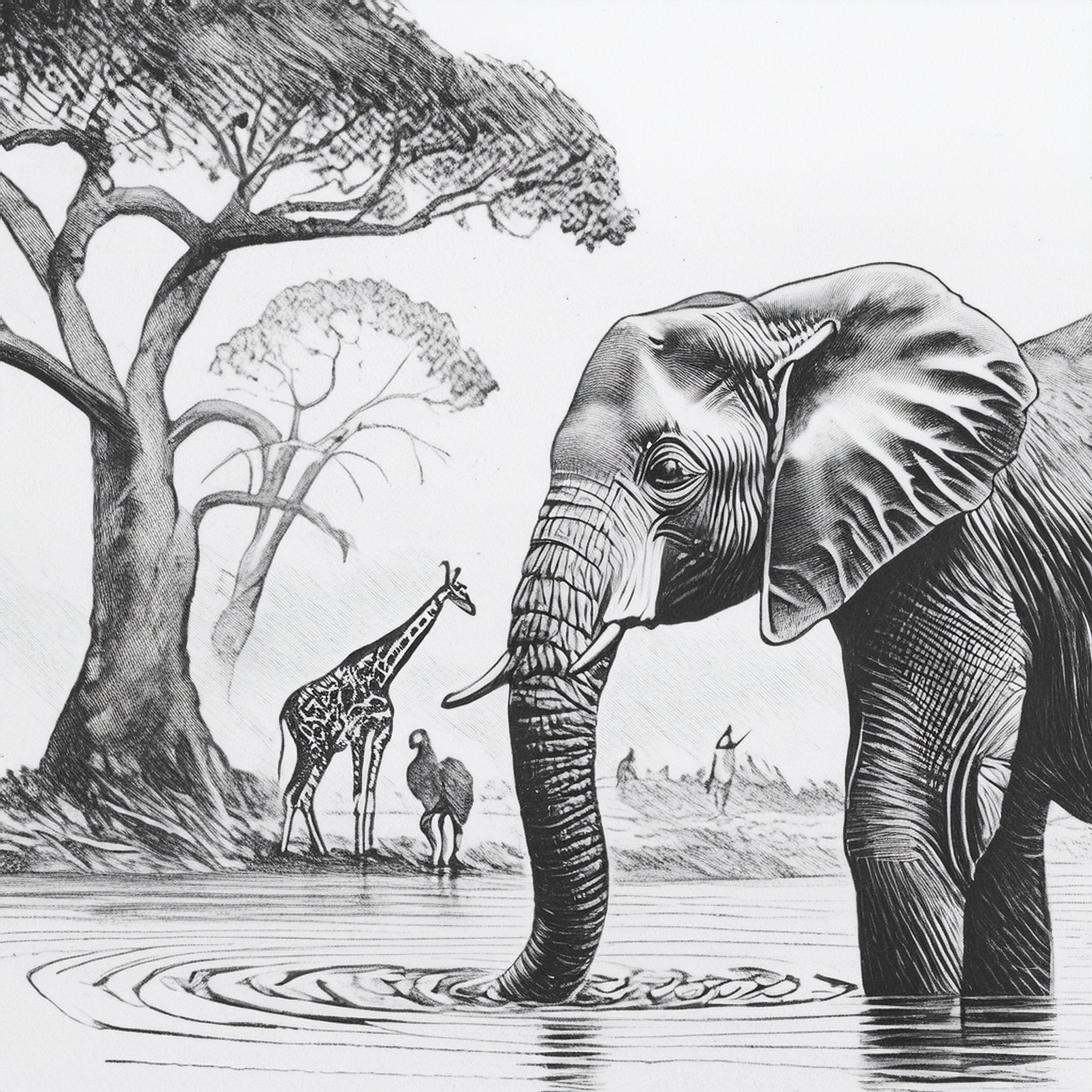

And Style Reference:

Original image.

Transformed with Style Reference.

I asked Adobe whether, with all the upgrades, Firefly image generation pricing will change. Currently, the cheapest Firefly premium plan is $4.99 per month – undercutting competitors like Midjourney ($10 per month) and OpenAI (which gates DALL-E 3 behind a $20-per-month ChatGPT Plus subscription).

Adobe said its current tiers will remain in place for now, along with its generative credit system. It also said that its indemnification policy, which states that Adobe will pay copyright claims related to works generated in Firefly, will not change, nor will its approach to watermarking AI-generated content. Content Credentials – metadata to identify AI-generated media – will be automatically attached to all Firefly image generations on the web and in Photoshop, whether generated from scratch or partially edited using generative features.
