Google’s new Gemini model can analyze an hour-long video – but only a few people can use it

Last October, a research paper published by a Google data scientist, Databricks CTO Matei Zaharia and UC Berkeley professor Pieter Abbeel proposed a way to allow GenAI models – that is, models along the lines of OpenAI’s GPT-4 and ChatGPT – to ingest far more data than was previously possible. In the study, the co-authors demonstrated that, by removing a major memory bottleneck for AI models, they could enable models to process millions of words as opposed to hundreds of thousands – the maximum of the most capable models at the time.

AI research, it seems, moves fast.

Today, Google announced the release of Gemini 1.5 Pro, the newest member of its Gemini family of GenAI models. Designed as a drop-in replacement for Gemini 1.0 Pro (which was previously called “Gemini Pro 1.0” for reasons known only to Google’s labyrinthine marketing arm), Gemini 1.5 Pro improves on its predecessor in a number of areas, perhaps most significantly in the amount of data it can process.

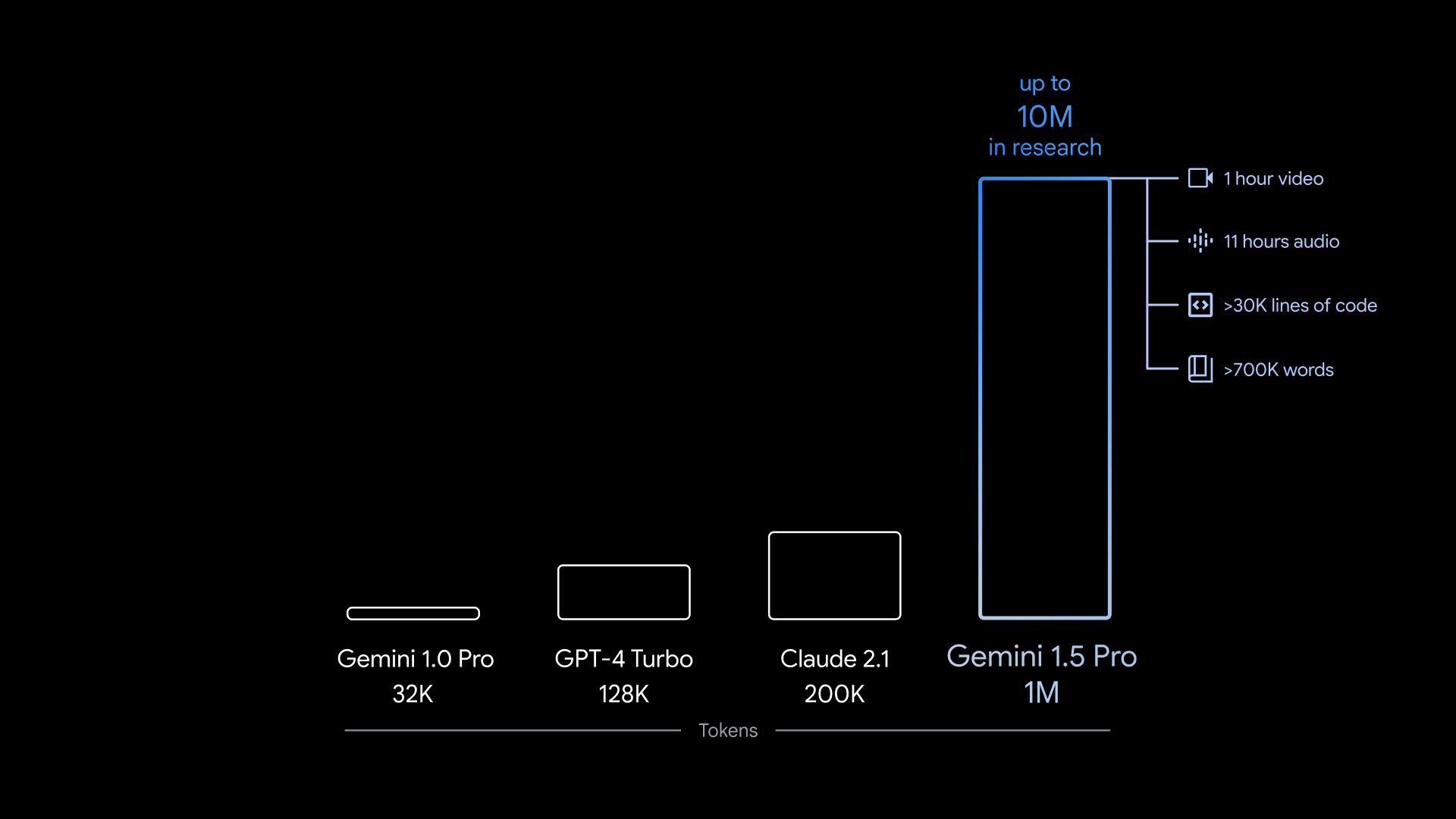

Gemini 1.5 Pro can take in ~700,000 words, or ~30,000 lines of code – 35 times as much as Gemini 1.0 Pro can handle. And because the model is multimodal, it isn’t limited to text. Gemini 1.5 Pro can ingest up to 11 hours of audio or one hour of video in a variety of languages.

Image Credit: Google

To be clear, that’s the upper bound.

The version of Gemini 1.5 Pro available to most developers and customers starting today (in a limited preview) can only process ~100,000 words at a time. Google is labeling the large-data-input Gemini 1.5 Pro as “experimental,” allowing only developers approved as part of a private preview to run it through the company’s GenAI dev tool, AI Studio. Some customers using Google’s Vertex AI platform also have access to the large-data-input Gemini 1.5 Pro – but not all of them.

Still, Oriol Vinyals, VP of research at Google DeepMind, called it an achievement.

“When you interact with [GenAI] models, the information you’re inputting and outputting becomes the context, and the longer and more complex your questions and interactions are, the longer the context the model needs to be able to deal with gets,” Vinyals said during a press briefing. “We have unlocked long context at a much larger scale.”

Big context

A model’s context, or context window, refers to the input data (for example, text) that the model considers before generating output (for example, more text). A simple question – “Who won the 2020 US presidential election?” – can serve as context, as can a movie script, email or e-book.

Models with small context windows tend to “forget” the content of even very recent conversations, causing them to veer off topic – often in problematic ways. This isn’t necessarily the case with models with larger contexts. As an added benefit, larger-context models can better grasp the narrative flow of the data they take in and generate more contextually rich responses – hypothetically, at least.
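
To make the “forgetting” concrete, here is a minimal sketch of one common way an application can fit a conversation into a fixed window: keep the newest messages and drop the oldest once the budget is exceeded. It is an illustration rather than a description of how Gemini or any particular product works; the function names are made up, and whitespace-separated words stand in for real tokens.

```python
from typing import List


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def fit_to_window(messages: List[str], max_tokens: int) -> List[str]:
    """Keep the most recent messages whose combined size fits in the window.

    Hypothetical helper: walks the history from newest to oldest and stops
    at the first message that would overflow the budget.
    """
    kept: List[str] = []
    used = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # everything older than this point is "forgotten"
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


conversation = [
    "My name is Ada and I am planning a trip to Lisbon.",
    "What is the weather like there in March?",
    "And which neighborhoods are good for walking?",
]

small_window = fit_to_window(conversation, max_tokens=16)
large_window = fit_to_window(conversation, max_tokens=100)

print(len(small_window), "messages fit in the small window")
print(len(large_window), "messages fit in the large window")
```

With the small budget, the opening message (the one that mentions the name and destination) no longer fits, so a model seeing only the kept messages has no way to know where “there” is. With the larger budget, nothing is dropped.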

There have been other attempts at, and experiments on, models with unusually large context windows.

AI startup Magic claimed last summer to have developed a large language model (LLM) with a 5 million-token context window. Two papers published in the past year detail model architectures ostensibly capable of scaling to a million tokens – and beyond. (“Tokens” are subdivided bits of raw data, such as the syllables “fan,” “tas” and “tic” in the word “fantastic.”) And recently, a group of scientists at Meta, MIT and Carnegie Mellon developed a technique that they say removes the constraint on model context window size altogether.

But Google is the first to make a model with a context window of this size commercially available, beating the previous leader Anthropic’s 200,000-token context window – if a private preview counts as commercially available.

Image Credit: Google

Gemini 1.5 Pro’s maximum context window is 1 million tokens, and the more widely available version of the model has a 128,000-token context window, the same as OpenAI’s GPT-4 Turbo.
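
As a rough sanity check on these figures, the sketch below converts between tokens and words using only numbers quoted in this article: 1 million tokens for roughly 700,000 words, and a 35x gap between Gemini 1.5 Pro and Gemini 1.0 Pro. The ratio of about 0.7 words per token is an average implied by those figures, not a property of Gemini’s tokenizer, and the helper name is made up for illustration.

```python
# Figures quoted in the article; the ratio they imply is only a rough average.
MAX_TOKENS = 1_000_000      # Gemini 1.5 Pro's maximum context window
MAX_WORDS = 700_000         # approximate word capacity at that window size
STANDARD_TOKENS = 128_000   # the more widely available context window


def approx_words(tokens: int) -> int:
    """Estimate word capacity for a token budget (hypothetical helper)."""
    # Integer arithmetic avoids floating-point rounding surprises.
    return tokens * MAX_WORDS // MAX_TOKENS


standard_words = approx_words(STANDARD_TOKENS)
# Word capacity of Gemini 1.0 Pro implied by the "35 times as much" claim.
previous_words = MAX_WORDS // 35

print(standard_words)   # 89600
print(previous_words)   # 20000
```

By this estimate, the 128,000-token window holds about 89,600 words, which is in the same ballpark as the ~100,000-word figure given for the widely available version.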

So what can one accomplish with a 1 million-token context window? Lots of things, Google promises – like analyzing entire code libraries, “reasoning” over lengthy documents like contracts, holding long conversations with chatbots, and analyzing and comparing content in videos.

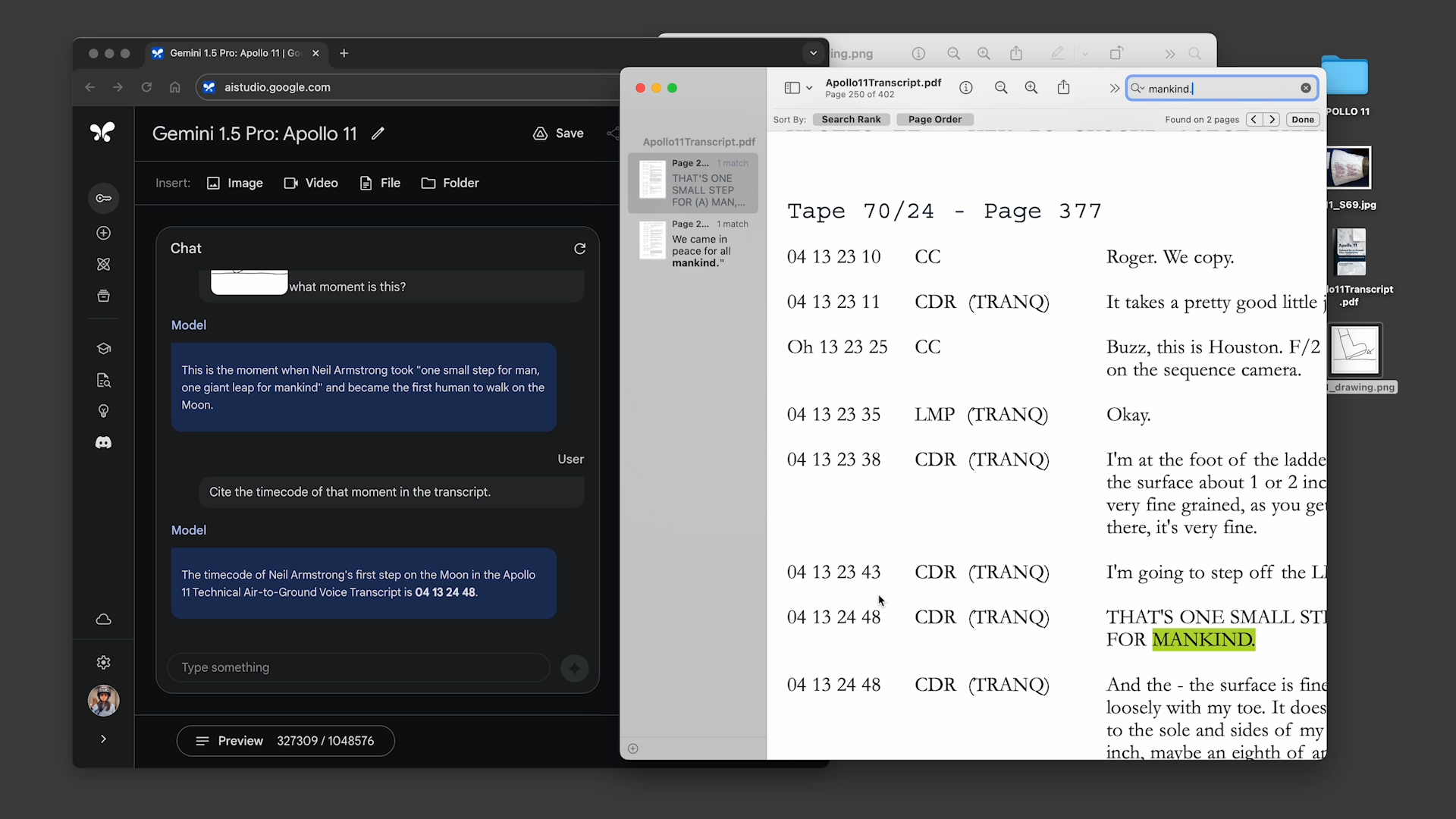

During the briefing, Google showed two pre-recorded demos of Gemini 1.5 Pro with the 1 million-token context window enabled.

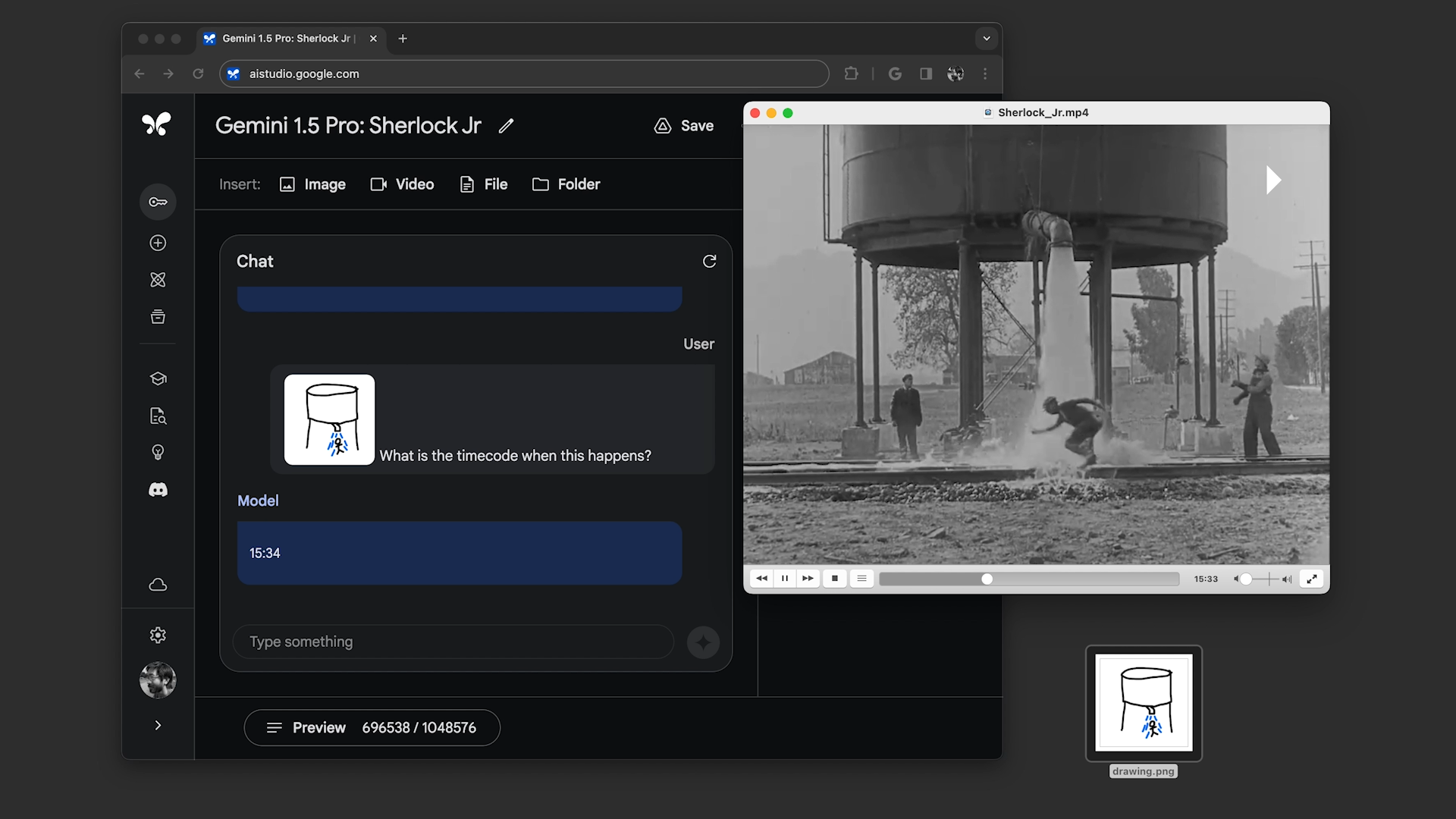

In the first, the demonstrator asked Gemini 1.5 Pro to search the transcript of the Apollo 11 moon landing telecast – which runs to roughly 402 pages – for quotes containing jokes, and then to find a scene in the telecast that resembled a pencil sketch. In the second, the demonstrator asked the model to search for scenes in the Buster Keaton film “Sherlock Jr.,” based on a description and another sketch.

Image Credit: Google

Gemini 1.5 Pro successfully completed all the tasks asked of it, but not particularly quickly. Each took anywhere from ~20 seconds to a minute to process – far longer than, say, the average ChatGPT query.

Vinyals says latency will improve as the model is optimized. The company is already testing a version of Gemini 1.5 Pro with a 10 million-token context window.

“The latency aspect [is something] we’re working to optimize – it’s still in an experimental phase, in a research phase,” he said. “So I would say these issues exist just like with any other model.”

Me, I’m not sure latency that poor will be attractive to many people – much less to paying customers. Waiting minutes at a time to search across a video doesn’t sound pleasant – or very scalable in the near term. And I worry about how the latency manifests in other applications, like chatbot conversations and analyzing codebases. Vinyals didn’t say – which doesn’t inspire much confidence.

My more optimistic colleague Frederic Lardinois pointed out that the overall time savings might make the thumb-twiddling worth it. But I think it will depend largely on the use case. For picking out a show’s plot points? Probably not. But for finding the right screengrab from a movie scene you only hazily remember? Perhaps.

Other improvements

In addition to the expanded context window, Gemini 1.5 Pro brings other quality-of-life upgrades.

Google claims that – in terms of quality – Gemini 1.5 Pro is “comparable” to the current version of Gemini Ultra, Google’s flagship GenAI model, thanks to a new architecture made up of smaller, specialized “expert” models. Gemini 1.5 Pro essentially breaks tasks down into multiple subtasks and then delegates them to the appropriate expert models, deciding which task to delegate based on its own predictions.
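
As a generic illustration of the idea (not Google’s implementation, which isn’t described here in any detail), the toy sketch below shows how an expert-based layer typically routes work: a gate scores every expert for a given input, and only the top-scoring experts run. The gate weights, the two “experts” and the function names are all invented for this example; in real models both the gate and the experts are learned neural network components.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Sequence[float]], Vector]


def softmax(scores: Sequence[float]) -> List[float]:
    """Turn raw gate scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(
    x: Sequence[float],
    gate_weights: Sequence[Sequence[float]],
    experts: Sequence[Expert],
    top_k: int = 1,
) -> Tuple[Vector, List[int]]:
    """Run only the top_k experts the gate prefers and blend their outputs."""
    # One gate score per expert: dot product of that expert's gate row with x.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    probs = softmax(scores)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalize over the chosen experts
    output = [0.0] * len(x)
    for i in chosen:
        expert_out = experts[i](x)
        weight = probs[i] / norm
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output, chosen


# Two made-up "experts" and a hand-written gate, purely for illustration.
EXPERTS: List[Expert] = [
    lambda x: [2.0 * v for v in x],  # expert 0 doubles its input
    lambda x: [-v for v in x],       # expert 1 negates its input
]
GATE_WEIGHTS = [[1.0, 0.0], [0.0, 1.0]]

output, chosen = route([3.0, 1.0], GATE_WEIGHTS, EXPERTS)
print(chosen, output)
```

The appeal of this design is that only the chosen experts do any work for a given input, so a model can hold many experts’ worth of parameters while spending a fraction of that compute per request.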

This approach, known as mixture of experts (MoE), isn’t new – it has existed in some form or other for years. But its efficiency and flexibility have made it an increasingly popular choice among model vendors (see: the model powering Microsoft’s language translation services).

Now, “comparable quality” is a bit of a vague descriptor. Quality where GenAI models are concerned, especially multimodal ones, is hard to measure – doubly so when the models are gated behind a private preview that excludes the press. For what it’s worth, Google claims that Gemini 1.5 Pro performs at “roughly the same level” as Ultra on the benchmarks the company uses to develop LLMs, while outperforming Gemini 1.0 Pro on 87% of those benchmarks. (I’d note that outperforming Gemini 1.0 Pro is a low bar.)

Pricing is a big question mark.

Google says that during the private preview, Gemini 1.5 Pro with the 1 million-token context window will be free to use. But the company plans to introduce pricing tiers in the near future that start at the standard 128,000-token context window and scale up to 1 million tokens.

I have to imagine the larger context window won’t come cheap – and Google didn’t allay fears by choosing not to disclose pricing during the briefing. If pricing is in line with Anthropic’s, it could cost $8 per million prompt tokens and $24 per million generated tokens. But perhaps it will be less; stranger things have happened! We’ll have to wait and see.
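
For a sense of scale, here is a back-of-the-envelope calculation using the speculative rates above. These are not Google’s prices, which have not been announced; the helper name and the example request sizes (a full window plus a 1,000-token answer) are assumptions made for illustration.

```python
from decimal import Decimal

# Speculative, Anthropic-style rates from the article, in US dollars.
PROMPT_RATE_PER_MILLION = Decimal("8")
OUTPUT_RATE_PER_MILLION = Decimal("24")
MILLION = Decimal(1_000_000)


def estimate_cost(prompt_tokens: int, output_tokens: int) -> Decimal:
    """Dollar cost of one request at the assumed rates (hypothetical helper)."""
    prompt_cost = PROMPT_RATE_PER_MILLION * prompt_tokens / MILLION
    output_cost = OUTPUT_RATE_PER_MILLION * output_tokens / MILLION
    return prompt_cost + output_cost


# Assumed request sizes: a completely full window plus a 1,000-token answer.
full_window = estimate_cost(1_000_000, 1_000)
standard_window = estimate_cost(128_000, 1_000)

print(f"1M-token prompt:   ${full_window}")
print(f"128K-token prompt: ${standard_window}")
```

At those assumed rates, a single request that fills the 1 million-token window would cost a little over $8, versus roughly $1.05 for one that fills the standard 128,000-token window.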

I also wonder about the implications for the rest of the models in the Gemini family, chiefly Gemini Ultra. Can we expect Ultra model upgrades to be roughly in line with the Pro upgrades? Or will there always be – as there is now – an awkward period where the available Pro models perform better than the Ultra models, which Google is still marketing as the top of the line in its Gemini portfolio?

If you’re feeling charitable, chalk it up to teething issues. If you’re not, call it like it is: extremely confusing.
