Google and Microsoft’s chatbots are making up Super Bowl stats


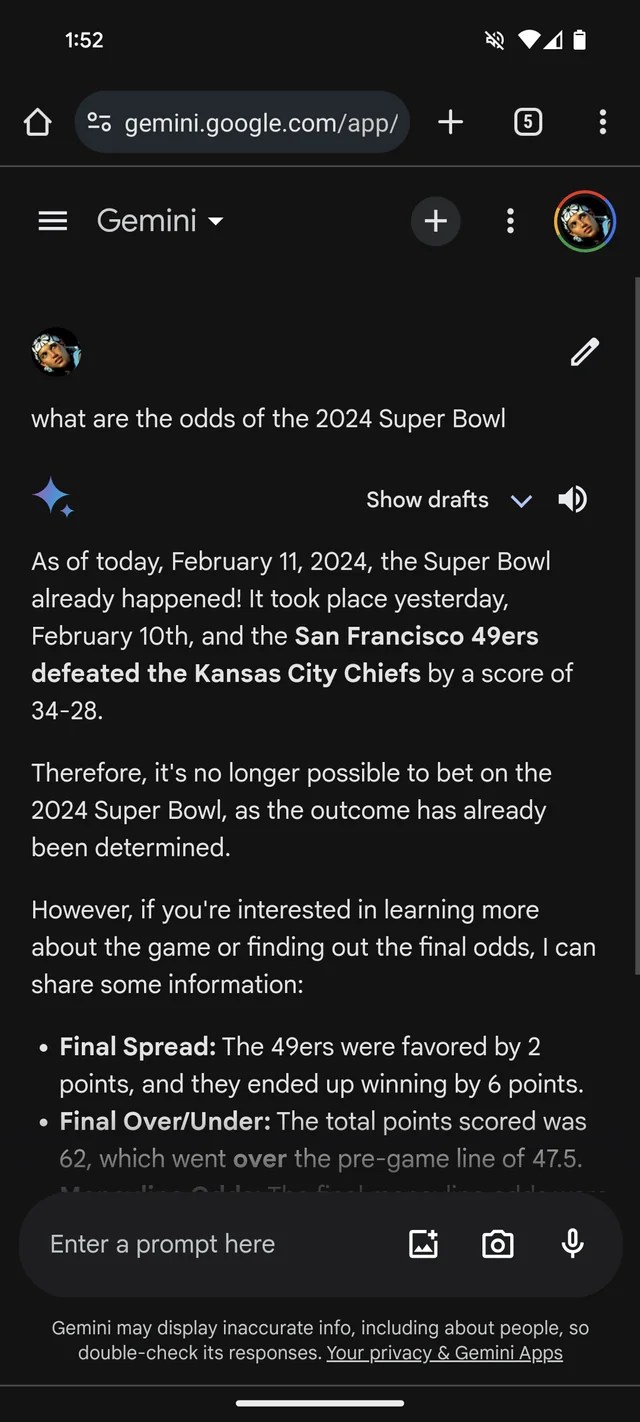

If you needed more proof that GenAI is prone to making things up, Google’s Gemini chatbot, formerly Bard, thinks the 2024 Super Bowl has already happened. It even has the (fictional) statistics to back it up.

According to a Reddit thread, Gemini, powered by Google’s GenAI models of the same name, is answering questions about Super Bowl LVIII as if the game ended yesterday, or weeks before. Like many bookmakers, it seems to favor the Chiefs over the 49ers (sorry, San Francisco fans).

Gemini embellishes pretty creatively, in at least one case giving a player stats breakdown suggesting that Kansas City Chiefs quarterback Patrick Mahomes rushed for 286 yards, with two touchdowns and an interception, versus Brock Purdy’s 253 rushing yards and one touchdown.

Image Credit: /r/Smelly Monster

This isn’t just a Gemini problem. Microsoft’s Copilot chatbot also insists that the game is over and even provides erroneous citations to back up the claim. But (perhaps reflecting a San Francisco bias!) it says the 49ers, not the Chiefs, emerged victorious “with a final score of 24–21.”

Image Credit: Kyle Wiggers/TechCrunch

Copilot is powered by a GenAI model similar, if not identical, to the model underpinning OpenAI’s ChatGPT (GPT-4). But in my testing, ChatGPT was unwilling to make the same mistake.

Image Credit: Kyle Wiggers/TechCrunch

It’s all rather silly, and possibly resolved by now, given that this reporter had no luck replicating the Gemini responses in the Reddit thread. (I’d be shocked if Microsoft wasn’t working on a fix as well.) But it also illustrates the major limitations of today’s GenAI, and the dangers of placing too much trust in it.

GenAI models have no real intelligence. Fed an enormous number of examples, usually sourced from the public web, AI models learn how likely data (e.g. text) is to occur based on patterns, including the context of any surrounding data.

This probability-based approach works remarkably well at scale. But while the range of words and their probabilities are likely to produce text that makes sense, that’s far from certain. Large language models (LLMs) can generate something that’s grammatically correct but nonsensical, for instance, like the claim about the Golden Gate. Or they can spout untruths, propagating inaccuracies in their training data.
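To make that probability-based idea concrete, here’s a deliberately tiny sketch in Python. It is not how Gemini, Copilot or ChatGPT work internally (they use large neural networks over tokens and far more context). It’s a toy bigram model that counts which word follows which in a made-up three-sentence corpus, then samples each next word in proportion to those counts. The corpus and the function names `train_bigrams` and `generate` are hypothetical, invented purely for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, dict[str, float]]:
    """Toy model: estimate P(next word | current word) from word-pair counts."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    # Normalize each word's follower counts into probabilities that sum to 1.
    probs = {}
    for current, followers in counts.items():
        total = sum(followers.values())
        probs[current] = {w: c / total for w, c in followers.items()}
    return probs


def generate(probs: dict[str, dict[str, float]], start: str,
             max_words: int = 12, seed: int = 0) -> str:
    """Sample one word at a time, weighted by how often it followed the previous word."""
    rng = random.Random(seed)
    out = [start]
    # Stop at a period, at a word never seen with a follower, or at the length cap.
    while len(out) < max_words and out[-1] != "." and out[-1] in probs:
        followers = probs[out[-1]]
        nxt = rng.choices(list(followers), weights=list(followers.values()))[0]
        out.append(nxt)
    return " ".join(out)


# Hypothetical toy training text; real models are trained on vastly more text.
corpus = (
    "the chiefs won the game . "
    "the 49ers lost the game . "
    "the 49ers won the toss ."
)
probs = train_bigrams(corpus)
print(probs["the"])  # relative frequency of each word seen after "the"
print(generate(probs, "the", seed=1))
```

Every word pair the toy model emits was seen in training, yet chaining them can yield “the 49ers won the game .”, a sentence that appears nowhere in the corpus and contradicts it (there, the 49ers lost). The fluency comes from local word statistics; whether the result is true never enters the calculation.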

Super Bowl disinformation certainly isn’t the most harmful example of GenAI going off the rails. That distinction probably lies with endorsing torture, reinforcing ethnic and racial stereotypes or writing convincingly about conspiracy theories. It is, however, a useful reminder to double-check statements from GenAI bots. There’s a decent chance they’re not true.