Geometry problems can now be solved by DeepMind’s latest AI

DeepMind, Google’s AI research and development lab, believes that finding new ways to solve challenging geometry problems could be key to building more capable AI systems.

DeepMind has introduced AlphaGeometry, a system that can solve about as many geometry problems as an International Mathematical Olympiad gold medalist. AlphaGeometry, which has been made publicly available, solves 25 Olympiad geometry problems within the allotted time, beating the previous leading system, which solved only 10.

According to Google AI research scientists Trieu Trinh and Thang Luong, solving Olympiad-level geometry problems is an important step toward the deep mathematical reasoning that more advanced and general AI systems will need. In a blog post published this morning on DeepMind’s website, they write that they hope AlphaGeometry will open up new possibilities across mathematics, science, and AI.

Why the focus on geometry? DeepMind argues that proving a mathematical theorem, that is, explaining logically why a statement such as the Pythagorean theorem is true, requires both reasoning and the ability to choose among many possible steps toward a solution. If DeepMind is right, this approach to problem solving could one day prove useful in general-purpose AI systems.

According to press materials DeepMind shared with TechCrunch, proving that a conjecture is true stretches the abilities of even today’s most advanced AI systems. Being able to prove mathematical theorems is therefore a significant milestone toward that goal, because it demonstrates both logical reasoning and the ability to discover new knowledge.

Training an AI system to solve geometry problems poses its own challenges.

Because translating proofs into a format machines can understand is so complex, usable geometry training data is scarce. And although today’s generative AI models excel at identifying patterns and relationships in data, they often cannot reason logically through theorems.

The approach taken by DeepMind consisted of two parts.

Image Credits to DeepMind

The lab built AlphaGeometry by pairing a “neural language” model, similar to the kind behind ChatGPT, with a “symbolic deduction engine,” which applies mathematical rules to infer solutions to problems. Symbolic engines can be slow and inflexible, especially on large or complicated datasets, so DeepMind uses the neural model to guide the deduction engine toward likely solutions to a given geometry problem.

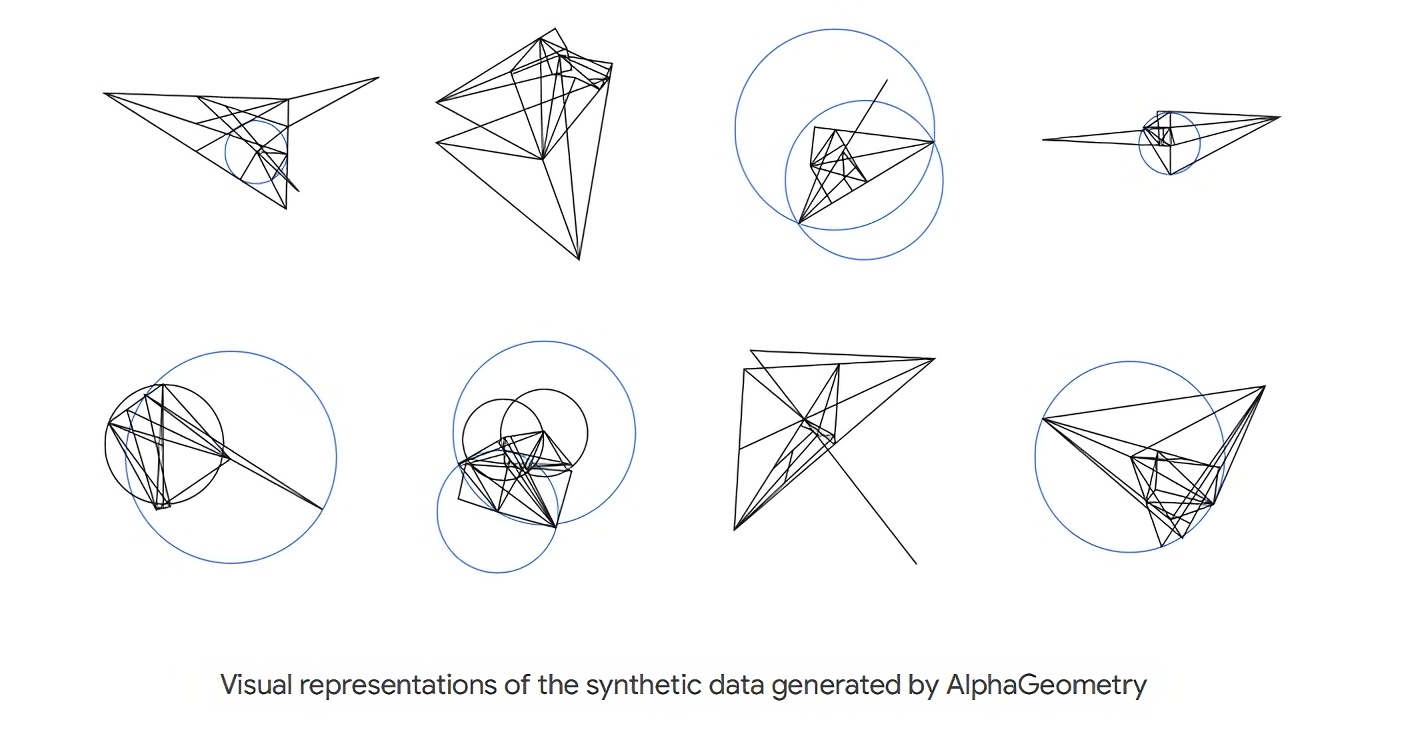

Instead of using pre-existing training data, DeepMind generated its own: 100 million “synthetic theorems” with proofs of varying complexity. The lab then trained AlphaGeometry entirely on this synthetic data and evaluated it on Olympiad geometry problems.
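The article does not say how the synthetic theorems were produced. One plausible way to get theorems with proofs of varying length, sketched below purely as an assumption, is to sample random premises, let a rule engine derive everything it can, and then trace each derived fact back to the steps that justify it. All names here (`closure_with_trace`, `proof_of`, `synthetic_theorems`) and the abstract toy rules are hypothetical, not DeepMind’s code.

```python
import random
from typing import Dict, FrozenSet, List, Set, Tuple

Rule = Tuple[FrozenSet[str], str]  # (required facts, conclusion)


def closure_with_trace(premises: Set[str], rules: List[Rule]) -> Dict[str, Rule]:
    """Forward-chain to a fixed point, remembering which rule first produced each new fact."""
    known = set(premises)
    derived_by: Dict[str, Rule] = {}
    changed = True
    while changed:
        changed = False
        for rule in rules:
            needs, conclusion = rule
            if needs <= known and conclusion not in known:
                known.add(conclusion)
                derived_by[conclusion] = rule
                changed = True
    return derived_by


def proof_of(fact: str, derived_by: Dict[str, Rule]) -> List[Rule]:
    """Trace a derived fact back to the ordered list of rule applications that justify it."""
    steps: List[Rule] = []
    seen: Set[str] = set()

    def visit(f: str) -> None:
        if f in seen or f not in derived_by:
            return  # already handled, or a premise that needs no proof
        seen.add(f)
        needs, _ = derived_by[f]
        for dependency in sorted(needs):
            visit(dependency)
        steps.append(derived_by[f])

    visit(fact)
    return steps


def synthetic_theorems(pool: List[str], rules: List[Rule], n_premises: int,
                       rng: random.Random) -> List[Tuple[Set[str], str, List[Rule]]]:
    """Sample premises, derive all consequences, and emit (premises, theorem, proof) triples."""
    premises = set(rng.sample(pool, n_premises))
    derived_by = closure_with_trace(premises, rules)
    return [(premises, fact, proof_of(fact, derived_by)) for fact in derived_by]


# Abstract toy rules: p and q give s; s and r give t.
TOY_RULES: List[Rule] = [
    (frozenset({"p", "q"}), "s"),
    (frozenset({"s", "r"}), "t"),
]

for premises, theorem, proof in synthetic_theorems(["p", "q", "r"], TOY_RULES, 3, random.Random(0)):
    print(sorted(premises), "=>", theorem, "in", len(proof), "step(s)")
```

In this sketch the length of each traced proof is a rough proxy for the complexity of the theorem it proves, which is one way a single generator could yield both easy and hard examples.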

Olympiad geometry problems are based on diagrams that need “constructs,” such as points, lines, or circles, added to them before they can be solved. AlphaGeometry’s neural model predicts which constructs might be useful to add, and its symbolic engine uses those predictions to make deductions about the diagram and work toward a solution.
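To make that division of labor concrete, here is a minimal Python sketch of a propose-and-deduce loop. It is a toy under stated assumptions, not DeepMind’s implementation: facts are plain strings, the two rules are hand-written, and `propose_construct` is a hypothetical stand-in for the neural model that always suggests the same midpoint. The problem is the textbook one of showing that the base angles of an isosceles triangle are equal.

```python
from typing import Callable, FrozenSet, List, Optional, Set, Tuple

Rule = Tuple[FrozenSet[str], str]  # (required facts, conclusion)


def deduce(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Toy symbolic engine: apply rules until no new fact appears."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for needs, conclusion in rules:
            if needs <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def solve(premises: Set[str], goal: str, rules: List[Rule],
          propose: Callable[[Set[str], str], Optional[str]],
          max_constructs: int = 3) -> Tuple[bool, List[str]]:
    """Alternate between deduction and asking the proposer for one more construct."""
    known = deduce(premises, rules)
    added: List[str] = []
    while goal not in known and len(added) < max_constructs:
        construct = propose(known, goal)
        if construct is None:
            break  # the proposer has nothing left to suggest
        added.append(construct)
        known = deduce(known | {construct}, rules)
    return goal in known, added


MIDPOINT = "M is the midpoint of BC"
GOAL = "angle ABC = angle ACB"

# Hand-written toy rules (assumption): side-side-side congruence, then equal angles.
RULES: List[Rule] = [
    (frozenset({"AB = AC", MIDPOINT}), "triangle ABM is congruent to triangle ACM"),
    (frozenset({"triangle ABM is congruent to triangle ACM"}), GOAL),
]


def propose_construct(known: Set[str], goal: str) -> Optional[str]:
    """Hypothetical stand-in for the neural model: suggest the midpoint once."""
    return MIDPOINT if MIDPOINT not in known else None


solved, added = solve({"AB = AC"}, GOAL, RULES, propose_construct)
print(solved, added)  # prints: True ['M is the midpoint of BC']
```

Without the suggested midpoint, the rule engine alone derives nothing new from `AB = AC`; the single added construct is what unlocks the proof.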

According to Trinh and Luong, AlphaGeometry’s language model can make good suggestions for new constructs because it has seen many examples of how such constructs led to proofs. Within AlphaGeometry, the neural model supplies fast, intuitive ideas, while the symbolic engine supplies slower, more deliberate and rational decision-making.

AlphaGeometry’s problem-solving results, described in a recent study in the journal Nature, are likely to feed the ongoing debate over whether AI systems should be built on symbol manipulation, that is, manipulating symbols that represent knowledge according to rules, or on ostensibly more brain-like neural networks.

Supporters of the neural network approach argue that intelligent behavior, from speech recognition to image generation, can emerge from nothing more than vast amounts of data and computing power. Whereas symbolic systems solve tasks by applying sets of rules, like the ones that edit a line in a word processing program, neural networks solve tasks through statistical approximation and learning from examples.

Proponents of symbolic AI counter that although neural networks play a crucial role in advanced AI systems such as OpenAI’s DALL-E 3 and GPT-4, they are not the ultimate solution. Symbolic AI, they argue, may be better at efficiently encoding the world’s knowledge, reasoning through complex scenarios, and explaining how it reached a conclusion.

As a hybrid system that combines symbol manipulation and neural networks, much like DeepMind’s AlphaFold 2 and AlphaGo, AlphaGeometry perhaps demonstrates that combining the two approaches is the most effective path toward generalizable AI. Perhaps.

Trinh and Luong write that their long-term goal is to build AI systems that can generalize across mathematical fields, developing the sophisticated problem-solving and reasoning that general AI systems will depend on, all while extending the frontiers of human knowledge. With this approach, they say, they hope to shape how future AI systems discover new knowledge in mathematics and beyond.